News

Apache Advances Kudu Columnar Storage Engine for Big Data

- By David Ramel

- July 25, 2016

The latest open source Big Data project to be advanced to top-level status by the Apache Software Foundation (ASF) is Kudu, a "columnar storage engine built for the Apache Hadoop ecosystem designed to enable flexible, high-performance analytic pipelines." The project reportedly fills in an architectural gap left open by other storage options, providing a missing piece to fill out the columnar storage puzzle.

One of many open source Big Data project championed by Hadoop distributor Cloudera Inc., Kudu provides another storage option for the Hadoop framework to complement the Hadoop Distributed File System (HDFS) and HBase, the company said in debuting the technology last fall before moving it to ASF as an incubating project.

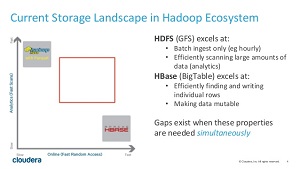

"Until now, developers have been forced to make a choice between fast analytics with HDFS or efficient updates with HBase," Cloudera said at the time. "Especially with the rise of streaming data, there has been a growing demand for combining the two features to build real-time analytic applications on changing data -- leading developers to create complex architectures with the storage options available. Kudu complements the capabilities of HDFS and HBase, providing simultaneous fast inserts and updates and efficient columnar scans. This powerful combination enables real-time analytic workloads with a single storage layer, eliminating the need for complex architectures."

[Click on image for larger view.]

Filling In the Gap Between HDFS and HBase (source: Cloudera)

[Click on image for larger view.]

Filling In the Gap Between HDFS and HBase (source: Cloudera)

In the ASF scheme of things, projects moved from the incubation stage to top-level status have demonstrated good governance under the organization's meritocratic process and principles. In being advanced to top-level status, Kudu enters a growing arena. It follows at least one other ASF columnar storage project, Apache Parquet (Cloudera again, with Twitter), which was moved up in April of last year. Another similar offering is Apache ORC, described as "the smallest, fastest columnar storage for Hadoop workloads." Cloudera earlier this year introduced Apache Arrow, "a fast, interoperable in-memory columnar data structure standard," in the hope that it becomes a de-facto reference for in-memory processing and interchange.

Along with those projects, Kudu has developed some momentum of its own.

"Under the Apache Incubator, the Kudu community has grown to more than 45 developers and hundreds of users," said Todd Lipcon, vice president of Apache Kudu and software engineer at Cloudera, in a news release today. "We are excited to be recognized for our strong open source community and are looking forward to our upcoming 1.0 release."

Earlier this month, Kudu was moved to version 0.9.1, according the Apache Kudu Blog, which posts weekly updates on the status of the project.

In anticipation of that 1.0 release mentioned by Lipcon (no timetable given), developers can get their hands on the source code in the form of a limited-functionality beta or a Kudu Quickstart Virtual Machine. To help with such early explorations of the technology, Kudu Developer Documentation is available on the GitHub source code repository.

Noting that Kudu was designed for "fast analytics on fast (rapidly changing) data," the project site states, "Kudu provides a combination of fast inserts/updates and efficient columnar scans to enable multiple real-time analytic workloads across a single storage layer. As a new complement to HDFS and Apache HBase, Kudu gives architects the flexibility to address a wider variety of use cases without exotic workarounds."

Before Kudu, such workarounds were required "when a use case requires the simultaneous availability of capabilities that cannot all be provided by a single tool," Cloudera's introductory blog post said. In such cases, "customers are forced to build hybrid architectures that stitch multiple tools together. Customers often choose to ingest and update data in one storage system, but later reorganize this data to optimize for an analytical reporting use-case served from another."

Kudu, with optimization for fast scanning, is especially useful for tasks such as hosting time-series data (a growing use case with the burgeoning Internet of Things, or IoT) and different kinds of operational data, today's news release said, noting that it's already being used in the retail, online service delivery, risk management and digital advertising industries.

Touting a "bring your own SQL" philosophy, Kudu can be accessed from various different query engines, such as the Apache projects Drill, Spark and Impala. The latter is another Cloudera-championed project that can work with Kudu and which will possibly itself move from incubation to top-level status.

"The Internet of Things, cybersecurity and other fast data drivers highlight the demands that real-time analytics place on Big Data platforms," said Arvind Prabhakar, ASF member and CTO of StreamSets, in today's announcement. "Apache Kudu fills a key architectural gap by providing an elegant solution spanning both traditional analytics and fast data access. StreamSets provides native support for Apache Kudu to help build real-time ingestion and analytics for our users."

About the Author

David Ramel is an editor and writer at Converge 360.