Google Updates Tango Tool for Mobile Augmented Reality

- By David Ramel

- August 17, 2016

Augmented reality is a hot new topic in the mobile development world, thanks to its use in the world's most popular game, Pokémon Go.

Capitalizing on that popularity, Google is publicizing an update to Tango, its mobile vision technology platform.

Specifically, the company has updated its Tango Unity SDK with new environmental lighting functionality, said Google's Sean Kirmani in a recent blog post titled "Adding a bit more reality to your augmented reality apps with Tango."

"Augmented reality scenes, where a virtual object is placed in a real environment, can surprise and delight people whether they're playing with dominoes or trying to catch monsters," Kirmani said. "But without support for environmental lighting, these virtual objects can stick out rather than blend in with their environments. Ambient lighting should bleed onto an object, real objects should be seen in reflective surfaces, and shade should darken a virtual object.

"Tango-enabled devices can see the world like we do, and they're designed to bring mobile augmented reality closer to real reality. To help bring virtual objects to life, we've updated the Tango Unity SDK to enable developers to add environmental lighting to their Tango apps."

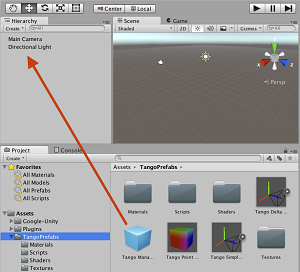

Using Tango Prefabs with Unity (source: Google)

Tango actually provides capabilities that go beyond the narrow use of augmented reality in Pokémon Go.

As explained on its Web site: "Tango is a platform that uses computer vision to give devices the ability to understand their position relative to the world around them. It's just like how you use your eyes to find your way to a room, to know where in the room you are, and know where the floor, the walls, and objects around you are. These physical relationships are an essential part of how we move through our daily lives. We want to give mobile devices this kind of understanding."

To do that, Tango uses three core technologies, described by the company as:

- Motion tracking: This allows a device to understand position and orientation using Tango's custom sensors. It provides real-time information about the 3D motion of the device.

- Area learning: Tango devices can use visual cues to help recognize the world around them. They can self-correct errors in motion tracking and relocalize in areas they've seen before.

- Depth perception: Depth sensors can tell you the shape of the world around you. Understanding depth lets your virtual world interact with the real world in new ways.
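To make the relationship between motion tracking and depth perception concrete, here is a minimal sketch of how a device pose (position plus orientation) could be used to place a depth point seen by the device into world coordinates. This is generic rigid-body math, not the Tango API: the `Pose` class, `rotate`, and `device_to_world` are hypothetical names chosen for illustration, and the quaternion ordering (x, y, z, w) is an assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical device pose (not a Tango type)."""
    translation: tuple  # device position in the world frame, in meters
    rotation: tuple     # unit quaternion (x, y, z, w), device-to-world


def rotate(q, v):
    """Rotate 3D vector v by unit quaternion q = (x, y, z, w)."""
    x, y, z, w = q
    vx, vy, vz = v
    # t = 2 * cross(q_xyz, v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + cross(q_xyz, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def device_to_world(pose, point):
    """Map a point measured in the device frame into the world frame."""
    rotated = rotate(pose.rotation, point)
    return tuple(r + t for r, t in zip(rotated, pose.translation))


if __name__ == "__main__":
    # Assumed example: device at (1, 2, 0), turned 90 degrees about the z axis.
    half = math.pi / 4
    pose = Pose(translation=(1.0, 2.0, 0.0),
                rotation=(0.0, 0.0, math.sin(half), math.cos(half)))
    # A depth point one meter along the device's x axis.
    print(device_to_world(pose, (1.0, 0.0, 0.0)))
```

In a real app the pose and depth points would come from the platform's own tracking and depth services; the sketch only shows why both are needed to anchor virtual content to real-world geometry.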

Those capabilities come with the $512 Tango tablet development kit, consisting of a device with a wide-angle camera, a depth-sensing camera, sensor timestamping capabilities and a software stack providing the three core technologies listed above. Although the kit features a tablet, Google said the Lenovo Phab 2 Pro is the first smartphone supporting Tango.

The kit can be used with the Tango Unity SDK (which just received the environmental lighting functionality) or with Java and C APIs, the latter for developers working with the Android NDK.

"The Unity SDK is great for making games and other programs requiring 3D visualization if you don't already have an existing or preferred rendering engine," Google says on its Web site. "We provide scripts, components, prefabs and demo programs in our Unity packages."

Tango technology is already featured in many Google Play apps (sometimes under its former name, Project Tango), such as: Tango MeasureIt, which lets users measure real-world objects with their device; Tango Constructor, which lets developers record and view 3D models; and Tangosaurus, which demonstrates the technical capabilities of Tango, such as motion tracking, depth perception, and (optionally) area learning. Many more apps that prominently feature augmented reality are available, some of them from third-party developers.

More information is available on the Tango Developer Overview, and interested developers can also play around with augmented reality example code on GitHub.

About the Author

David Ramel is an editor and writer at Converge 360.