Data Scientists Worry About Human Bias in Machine Learning, AI-Based Warfare

By David Ramel

April 20, 2017

Data scientists are a happy bunch overall, but they do worry about ethical issues such as human bias and prejudice being programmed into machine learning (ML) and the use of artificial intelligence (AI) and automation in warfare and intelligence gathering.

That's one finding of the new "2017 Data Scientist Report" just published by AI specialist CrowdFlower Inc.

"Read any article on AI (and there is no shortage) and shortly behind, you'll likely find mention of ethical issues," the report said. "From the White House to the Wall Street Journal to the World Economic Forum, the question of how we program the future is one of the most critical issues facing not just data scientists but society as a whole. In perhaps the most important question in this year's survey, we asked, 'Which of the following do you personally think might be issues regarding ethics and AI?' "

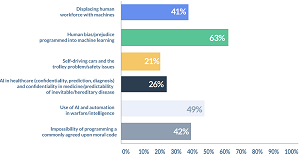

The top concern raised in answering that question was "human bias/prejudice programmed into machine learning" (listed by 63 percent of respondents), followed by "use of AI and automation in warfare/intelligence" (49 percent).

"Unease on the displacement of human workforces and the impossibility of programming a commonly agreed upon moral code also ranked high on the radar of ethical issues for data scientists tallying in at 41 percent and 42 percent respectively," CrowdFlower said.

Issues Regarding Ethics and AI (source: CrowdFlower)

Other answers included "AI in healthcare (confidentiality, prediction, diagnosis) and confidentiality in medicine/predictability of inevitable/hereditary disease" (26 percent) and "self-driving cars and the trolley problems/safety issues" (21 percent).

CrowdFlower surveyed 179 data scientists around the world in February and March. They worked for organizations ranging from those with fewer than 100 employees to those with more than 10,000 in a variety of industries, with 40 percent coming from "technology" organizations.

Despite the ethical concerns surrounding the growing use of ML and AI in their field, data scientists reported they were happy being in a profession that has been called the "sexiest job of the 21st century."

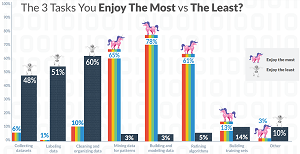

Job Task Likes, Dislikes (source: CrowdFlower)

"64 percent of data scientists agree that they are working in this century's sexiest job (but 3 percent would rather be rockstars)," the report said.

They are also still in high demand, so enterprises lucky enough to have them on staff should probably worry about holding on to them.

"Nearly 90 percent of data scientists (89 percent) are contacted at least once a month for new job opportunities, over 50 percent are contacted on a weekly basis, and 30 percent report being contacted several times a week," the report said.

Other highlights from the latest edition of the company's continuing report series include:

- The most enjoyable tasks are building and modeling data (78 percent), mining data for patterns (65 percent) and refining algorithms (61 percent).

- The least enjoyable tasks are cleaning and organizing data (60 percent), labeling data (51 percent) and collecting datasets (48 percent).

- In a "would you rather" question with three alternative answers, the top choice was "accidentally delete all your ML code (with no backup)," listed by 52 percent of respondents, followed by "break a leg" (28 percent) and "accidentally delete all of your training data (with no backup)" at 21 percent.

- The biggest bottleneck in running successful AI/ML projects is "improving the quality of or getting good training data" (51 percent), followed by "deploying your ML model into production" (29 percent) and "delivering accurate ML models" (9 percent).

- Asked when they'll take their first ride in a self-driving car, 36 percent said within three to five years and 26 percent said within two years, while 2 percent already have.

"In summary, if 2016 was the year of the algorithm, we're proclaiming 2017 the year of data -- training data to be precise," CrowdFlower concluded. "Data scientists are spending more than half their time labeling and creating it, they value it over machine learning code (and unbroken legs), it's decidedly determined to come 'before the algorithm' and -- most importantly -- its integrity is key to providing unbiased models as AI starts to drive our future.

"Despite the fact that zero percent of data scientists predict they'll be dealing with less data in 2017, quality levels are less predictable and lack of access to high quality training data is the single biggest reason AI projects fail. Given the massive proliferation of AI projects in virtually every sector across the globe, data scientists must work to offload routine work and streamline processes in the face of increasing data, increasing AI projects and a continued shortage of those with the necessary skills."

About the Author

David Ramel is an editor and writer at Converge 360.