
IBM Provides Deep Learning as a Service

- By David Ramel

- March 20, 2018

The latest effort to make artificial intelligence (AI) programming more accessible to developers in the face of an industry-wide skills shortage comes from IBM, which today announced Deep Learning as a Service.

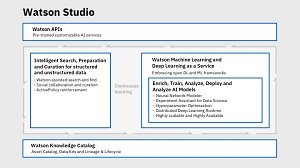

The new service comes with Watson Studio, a cloud-native set of tools that provide an environment for building, training and deploying AI models that work with various types of data.

Furthermore, IBM said it's open sourcing a core component of the new service, called the Fabric for Deep Learning (or FfDL, pronounced "fiddle"), which is now on GitHub.

Industry experts have predicted AI will be the most disruptive technology over the next decade, and numerous efforts are underway to get AI programming power into the hands of the developer masses because of a lack of specialized AI skills.

For example, Alteryx Inc., a data science and analytics software maker based in Irvine, Calif., this month released Alteryx Promote, a component of the Alteryx Analytics platform, which it says "allows both data scientists and citizen data scientists to deploy predictive models directly into business applications through an API."

Similarly, IBM's new offering uses the RESTful API approach, following the familiar "as-a-service" model (IBM calls it DLaaS) to ease the burden of refining data, training neural network models and creating deep learning models.

DLaaS in Watson Studio (source: IBM)

"Drawing from advances made at IBM Research, Deep Learning as a Service enables organizations to overcome the common barriers to deep learning deployment: skills, standardization, and complexity," IBM said in a post today (March 20). "It embraces a wide array of popular open source frameworks like TensorFlow, Caffe, PyTorch and others, and offers them truly as a cloud-native service on IBM Cloud, lowering the barrier to entry for deep learning. It combines the flexibility, ease-of-use, and economics of a cloud service with the compute power of deep learning. With easy to use REST APIs, one can train deep learning models with different amounts of resources per user requirements, or budget."

Although announced only today, the service has been in the works for some time: IBM published the "IBM Deep Learning Service" paper in July 2017.

That research paper, provided as a PDF, says: "These two trends, deep learning and 'As-a-Service,' are colliding to give rise to a new business model for cognitive application delivery: deep learning as a service in the cloud."

IBM outlined several advances its research team has made to enable the new service, including:

- Training in the cloud

- Automating the parameters of neural networks

- A dashboard for checking experiments

- Visual programming for deep learning

Speaking of those advances, IBM said in a separate post: "Deep learning and machine learning require expensive hardware and software resources as well as more expensive skilled scientists and developers. Deep learning, in particular, requires users to be experts at different levels of the stack, from neural network design to new hardware. Allowing them to be more effective requires cross-stack innovation and software/hardware co-design."

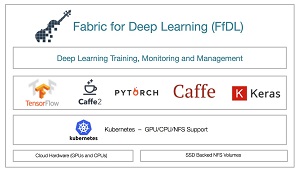

Fabric for Deep Learning (source: IBM)

IBM is also looking to the community for help in furthering those advances, centered on the aforementioned open source Fabric for Deep Learning (FfDL).

The company describes FfDL as a collaboration platform for:

- Framework-independent training of deep learning models on distributed hardware

- Open deep learning APIs

- Common instrumentation

- Running deep learning hosting in a user's private or public cloud

"Leveraging the power of Kubernetes, FfDL provides a scalable, resilient, and fault-tolerant deep-learning framework," IBM said. "The platform uses a distribution and orchestration layer that facilitates learning from a large amount of data in a reasonable amount of time across compute nodes. A resource-provisioning layer enables flexible job management on heterogeneous resources, such as GPUs and CPUs on top of Kubernetes."

Jim Zemlin, executive director of The Linux Foundation, weighed in on IBM's open sourcing of FfDL.

"Just as The Linux Foundation worked with IBM, Google, Red Hat and others to establish the open governance community for Kubernetes with the Cloud Native Computing Foundation, we see IBM's release of Fabric for Deep Learning, or FfDL, as an opportunity to work with the open source community to align related open source projects, taking one more step toward making deep learning accessible," Zemlin said. "We think its origin as an IBM product will appeal to open source developers and enterprise end users."

About the Author

David Ramel is an editor and writer at Converge 360.