
Google Open Sources Tool for Running C Code in Hadoop

- By David Ramel

- February 27, 2015

The venerable C programming language isn't going away, as Google showed by open sourcing MapReduce for C (MR4C), a framework that lets Big Data developers run native C code in Hadoop.

"MR4C is an implementation framework that allows you to run native code within the Hadoop execution framework," the project's GitHub page states. "Pairing the performance and flexibility of natively developed algorithms with the unfettered scalability and throughput inherent in Hadoop, MR4C enables large-scale deployment of advanced data processing applications."

In a blog post, Ty Kennedy-Bowdoin explained that the framework was created by Skybox Imaging, a Google-acquired company that uses data from orbiting satellites to build analytics solutions.

"MR4C was originally developed at Skybox Imaging to facilitate large scale satellite image processing and geospatial data science," Kennedy-Bowdoin said last week. "We found the job tracking and cluster management capabilities of Hadoop well-suited for scalable data handling, but also wanted to leverage the powerful ecosystem of proven image processing libraries developed in C and C++."

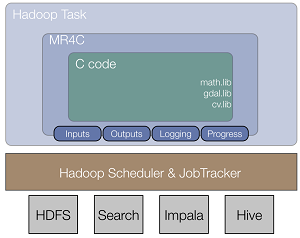

How MapReduce for C Works (source: Google)

The project's goal, he said, is to abstract the important aspects of MapReduce, an oft-maligned original Hadoop component focused on batch processing. Numerous projects have tried to improve on MapReduce to meet the modern demands of streaming, real-time analytics.

Abstracting some details, Kennedy-Bowdoin said, will help developers focus on the more important stuff, like creating useful algorithms.

"MR4C is developed around a few simple concepts that facilitate moving your native code to Hadoop," he said. "Algorithms are stored in native shared objects that access data from the local filesystem or any uniform resource identifier (URI), while input/output datasets, runtime parameters, and any external libraries are configured using JavaScript Object Notation (JSON) files.

"Splitting mappers and allocating resources can be configured with Hadoop YARN-based tools or at the cluster level for MRv1 [MapReduce version 1]," he continued. "Workflows of multiple algorithms can be strung together using an automatically generated configuration. There are callbacks in place for logging and progress reporting which you can view using the Hadoop JobTracker interface. Your workflow can be built and tested on a local machine using exactly the same interface employed on the target cluster."

The GitHub site says the project comes with four scripts to build, clean, deploy and remove the code. It has been tested on the Ubuntu 12.04 and CentOS 6.5 Linux distributions, and on Cloudera's CDH distribution of Apache Hadoop.
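The article does not show MR4C's own interface, so what follows is only a minimal C sketch, under stated assumptions, of the ideas Kennedy-Bowdoin describes: algorithms that share a single entry-point signature, runtime parameters kept separate from the code, and a workflow that strings several algorithms together and can be exercised on a local machine. The types and functions (`Dataset`, `Params`, `Algorithm`, `threshold_algo`, `count_algo`, `run_workflow`) are hypothetical names invented for illustration, not MR4C's API. Real MR4C algorithms live in native shared objects and take their parameters from JSON files; this sketch replaces both with plain in-process code.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical types and names for illustration only; this is NOT MR4C's
 * real API. A dataset is a flat buffer of integer pixel values. */
typedef struct {
    int *values;  /* pixel data */
    size_t len;   /* number of valid values */
    size_t cap;   /* capacity of the values buffer */
} Dataset;

/* Runtime parameters. MR4C reads these from JSON configuration; here they
 * are simply passed in as a struct (an assumption of this sketch). */
typedef struct {
    int threshold;
} Params;

/* Every algorithm shares one signature, so the same driver can call any of
 * them. Returns 0 on success, -1 on error. */
typedef int (*Algorithm)(const Dataset *in, Dataset *out, const Params *p);

/* Step 1: mark each pixel 1 if it meets the threshold, otherwise 0. */
int threshold_algo(const Dataset *in, Dataset *out, const Params *p) {
    if (out->cap < in->len) return -1;
    for (size_t i = 0; i < in->len; i++)
        out->values[i] = in->values[i] >= p->threshold ? 1 : 0;
    out->len = in->len;
    return 0;
}

/* Step 2: reduce the input to a single value, the count of nonzero pixels. */
int count_algo(const Dataset *in, Dataset *out, const Params *p) {
    (void)p; /* this step takes no parameters */
    if (out->cap < 1) return -1;
    int count = 0;
    for (size_t i = 0; i < in->len; i++)
        if (in->values[i] != 0) count++;
    out->values[0] = count;
    out->len = 1;
    return 0;
}

/* Run the steps in order, feeding each step's output into the next.
 * The two scratch buffers are used alternately, so (as long as the input is
 * not one of them) a step never reads and writes the same buffer.
 * Returns the final dataset, or NULL if any step fails. */
const Dataset *run_workflow(const Algorithm *steps, size_t n_steps,
                            const Dataset *in, Dataset scratch[2],
                            const Params *p) {
    assert(steps != NULL && n_steps > 0);
    const Dataset *cur = in;
    for (size_t i = 0; i < n_steps; i++) {
        Dataset *next = &scratch[i % 2];
        if (steps[i](cur, next, p) != 0) return NULL;
        cur = next;
    }
    return cur;
}
```

For example, running the steps `{threshold_algo, count_algo}` over the pixel values {10, 200, 130, 90, 255} with a threshold of 128 yields a one-element dataset containing 3. Because the runner only knows the shared signature, the same chain could in principle be driven by a local test harness or by a cluster-side wrapper; how MR4C itself achieves that portability is not shown here.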

"While many software companies that deal with large datasets have built proprietary systems to execute native code in MapReduce frameworks, MR4C represents a flexible solution in this space for use and development by the open source community," Kennedy-Bowdoin said.

About the Author

David Ramel is an editor and writer at Converge 360.