Machine Learning Powers New iOS Developer Functionality

- By David Ramel

- June 6, 2018

Artificial intelligence (AI) breakthroughs continue to make their way into developer tooling, with a new machine learning framework for the upcoming iOS 12 being the latest example.

At the ongoing 2018 Apple Worldwide Developers Conference in San Jose, Calif., the company introduced the new Core ML 2 framework, which developers can use in a variety of ways across a variety of applications on Apple's flagship mobile OS. iOS 12 is available now in preview to members of the $100-per-year Apple Developer Program, with a public beta due later this month and general availability coming in a fall device software update.

Apple said the framework allows for easy integration of machine learning models, helping developers build intelligent apps with a minimum of code.

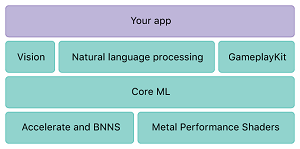

"In addition to supporting extensive deep learning with over 30 layer types, it also supports standard models such as tree ensembles, SVMs, and generalized linear models," Apple said. "Because it's built on top of low level technologies like Metal and Accelerate, Core ML seamlessly takes advantage of the CPU and GPU to provide maximum performance and efficiency. You can run machine learning models on the device so data doesn't need to leave the device to be analyzed."

Core ML (source: Apple)

In its developer documentation, Apple said Core ML's optimization for on-device performance minimizes its memory footprint and power consumption, while also ensuring the privacy of user data and guaranteeing apps remain functional and responsive even in the absence of network connectivity.

Apple products that already leverage the machine learning power of Core ML 2 include Siri, Camera, and QuickType, which draw on features such as computer vision. That functionality enables face tracking and detection, landmarks, text detection, and more. In addition, the new Natural Language framework can be used to analyze natural language text and deduce its language-specific metadata, and another component, GameplayKit, can be used to evaluate learned decision trees.

Core ML 2 lets developers process models more quickly than before and make them smaller. Apple also introduced the brand-new Create ML framework to facilitate building such models, along with related tasks such as training and deploying custom natural language processing (NLP) models.

The power of AI was also put to use in Apple's augmented reality tooling, specifically ARKit 2, which was introduced at the conference along with Core ML 2 and other new products.

Apple also introduced:

- The Xcode 10 beta, which includes the SDKs for iOS 12, watchOS 5, tvOS 12, and macOS Mojave.

- Siri Shortcuts: "Siri can now intelligently pair users' daily routines with your apps to suggest convenient shortcuts right when they're needed. Use the Shortcuts API to help users quickly accomplish tasks related to your app, directly from the lock screen, in Spotlight, or from the Siri watch face."

- An all-new Mac App Store: "The reimagined Mac App Store arrives with a new look and new editorial content that inspires and informs. Organized around the specific things customers love to do on Mac, along with insightful stories, curated collections, and videos, the Mac App Store on macOS Mojave beautifully showcases your apps and makes them even easier to find."

- Updated App Store Review Guidelines: "We review all apps submitted to the App Store in an effort to determine whether they are reliable, perform as expected, and are free of offensive material."

More developer information on using Core ML 2 can be found in the Core ML Framework Reference and the guidance on Integrating a Core ML Model into Your App. All the news coming from WWDC, which concludes Friday, can be found here.

About the Author

David Ramel is an editor and writer for Converge360.