Researchers Warn of Insecure Online Coding Advice

- By David Ramel

- January 9, 2019

Several studies have linked the use of open source software and tutorials with the introduction of security vulnerabilities in production code, and a new one finds the same problem with online coding advice.

Academic researchers examined the security implications of using "crowdsourced knowledge," specifically that found on Stack Overflow (SO), a Q&A site for developers. Their work resulted in the recent publication of "How Reliable is the Crowdsourced Knowledge of Security Implementation?" by researchers from Virginia Tech, Technical University of Munich and University of Texas at San Antonio. One main takeaway from the paper: Soliciting and using SO answers can leave developers "wandering around alone in a software security minefield."

The study follows other efforts that found popular production apps riddled with security vulnerabilities introduced by open source software packages or by copying and pasting insecure tutorial code into such apps, to name just two of many examples.

In this case, the researchers examined how SO works: coders looking for help solicit advice in a post and then choose, or "accept," the best answer, with all proposed answers subject to upvotes, downvotes and commentary by other users.

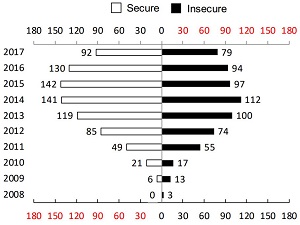

The Distribution of Secure/Insecure SO Posts 2008-2017 (source: Research Paper)

They sought in part to answer two broad questions:

- How much can we trust the security implementation suggestions on SO?

- If suggested answers are vulnerable, can developers rely on the community's dynamics to infer the vulnerability and identify a secure counterpart?

The answers are basically "not much" and "no."

"Our findings show that based on the distribution of secure and insecure code on SO, users being laymen in security rely on additional advice and guidance," the report states. "However, the community-given feedback does not allow differentiating secure from insecure choices. The reputation mechanism fails in indicating trustworthy users with respect to security questions, ultimately leaving other users wandering around alone in a software security minefield."

Recommendations to address the problem include:

- Tool builders should explore approaches that accurately and flexibly detect and fix security bugs.

- SO developers should integrate static checkers to scan the existing corpus and SO posts under submission, and automatically add warning messages or special tags to any post that has vulnerable code.

- Designers of crowdsourcing platforms should provide incentives to users for downvoting or detailing vulnerabilities and suggesting secure alternatives, while also introducing mechanisms to encourage owners of outdated or insecure answers to proactively archive or close them.
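To make the static-checker recommendation concrete, here is a minimal sketch of how a platform might scan a post's code and prepend a warning. It is not the researchers' tooling: the rule list, the function names `scan_snippet` and `tag_post`, and the banner format are all hypothetical, and a real checker would need proper code analysis rather than a few regular expressions.

```python
import re
from typing import List

# Hypothetical rule set: a few widely known insecure Java idioms.
# These rules are illustrative assumptions, not the checks used in the paper.
RULES = [
    (re.compile(r'MessageDigest\.getInstance\(\s*"(MD5|SHA-?1)"'),
     "weak hash algorithm"),
    (re.compile(r'Cipher\.getInstance\(\s*"(DES|AES/ECB)(/[^"]*)?"'),
     "weak cipher or ECB mode"),
    (re.compile(r'ALLOW_ALL_HOSTNAME_VERIFIER'),
     "hostname verification disabled"),
]


def scan_snippet(code: str) -> List[str]:
    """Return the label of every rule whose pattern appears in the snippet."""
    return [label for pattern, label in RULES if pattern.search(code)]


def tag_post(body: str) -> str:
    """Prepend a warning banner (hypothetical format) if any rule matches."""
    warnings = scan_snippet(body)
    if not warnings:
        return body
    banner = "[security-warning: " + "; ".join(warnings) + "]"
    return banner + "\n" + body


if __name__ == "__main__":
    answer = 'MessageDigest md = MessageDigest.getInstance("MD5");'
    print(tag_post(answer))
```

A pattern scan like this is cheap enough to run on every submission, but it will miss anything it has no rule for and can flag code that only discusses an insecure call, which is why the paper pairs the idea with community incentives rather than relying on tooling alone.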

"When developers refer to crowdsourced knowledge as one of the most important information resources, it is crucially important to enhance the quality control of crowdsourcing platforms," the paper concludes. "This calls for a strong collaboration between developers, security experts, tool builders, educators, and platform providers. By educating developers to contribute high-quality security-related information, and integrating vulnerability and duplication detection tools into platforms, we can improve software quality via crowdsourcing. Our future work is focused on building the needed tool support."

About the Author

David Ramel is an editor and writer at Converge 360.