News

Analyst: Hadoop-as-a-Service Can Help Tame Notorious Management Complexity

- By David Ramel

- December 7, 2015

Typical enterprise Hadoop distributions rely on a lot of moving parts -- leveraging up to 20 or more disparate components -- which leads to management complexity and total cost of ownership (TCO) issues, according to a recent research note from Wikibon analyst George Gilbert, who proposed Hadoop-as-a-Service (HaaS) as one way to address some of the attendant concerns.

Be warned, however, that Gilbert doesn't see HaaS as a panacea for manageability challenges, just perhaps the best of several potential solutions that each come with advantages, disadvantages and trade-offs. He does, however, tout it as a viable option for companies building public cloud-based Web and mobile applications that rely on on-premises Hadoop back-ends.

"Prospective customers as well as those who are still in proof-of-concept or pilot need to understand that there are no easy solutions," Gilbert said in his Nov. 24 "The Manageability Challenge Facing Hadoop" research note for Wikibon, which describes itself as "an open community where you can read, write and collaborate on a range of topics," featuring contributions from writers, experts, practitioners, users, vendors and researchers.

Gilbert described the trade-offs of three proposed alternative solutions to solving the TCO and manageability problem:

- Running Hadoop-as-a-Service.

- Using Spark as the computing core of Hadoop.

- Building on the native services of the major cloud vendors such as Amazon Web Services (AWS) (Kinesis Firehose, DynamoDB, Redshift and more), Microsoft Azure, or Google Cloud Platform while integrating specialized third-party services such as Redis.

Spark, the current darling of the Big Data ecosystem -- described as the most active open source project -- might seem to be the logical choice for enterprise Big Data application development, but it doesn't ingest data, manage data or come with a database or file system. Cassandra is a popular choice to fill that latter role, Gilbert said. Spark also needs a service "to make sure a majority of the other services are live and talking to each other," Gilbert said, such as Zookeeper, which is described as "a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services." Kafka, meanwhile is becoming the de facto standard for Big Data ingestion. And, of course, Spark lacks a management console.

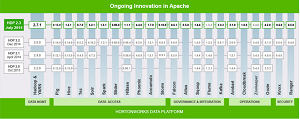

[Click on image for larger view.]

Individual Projects in the Hortonworks Data Platform. (source: Wikibon via Hortonworks)

[Click on image for larger view.]

Individual Projects in the Hortonworks Data Platform. (source: Wikibon via Hortonworks)

"So getting up and running with a Spark cluster takes no less than 12 servers: three for each of the services," Gilbert said. "Again, even though Spark is a single, unified processing engine, it requires at least four different services. And that's where the management complexity comes back into play. Each service has its own way of failing; its own way of managing access; its own attack surface; and its own admin model."

Another option, relying on native cloud services from providers such as AWS, Azure and Google Cloud Platform, can provide dramatic manageability gains, but that approach involves its own trade-offs, such as limited choice and lack of open source portability.

"All the cloud providers will provide ever more powerful DevOps tools to simplify development and operations of applications on their platforms," Gilbert said. "But as soon as customers want the ability to plug in specialized third-party functionality, that tooling will likely break down. The overhead of opening up these future tools is far more difficult than building them knowing in advance just what services they'll be managing."

That leaves the managed HaaS service option, in which AWS also figures with its Elastic MapReduce (EMR) service, but it apparently suffers from the separation of compute and storage resources favored by AWS, which increases management complexity, among other trade-offs.

Another HaaS vendor, Altiscale, simplifies Hadoop management with a purpose-built, proprietary hardware/software infrastructure. By being familiar with system internals, Altiscale can make Hadoop management less labor intensive, said Gilbert, who added the caveat: "Of course, customers have to get their data to the datacenters that host Altiscale, and they don't have the rich ecosystem of complementary tools on AWS."

So, with no "perfect" solution available for all scenarios, Gilbert offered the following concluding "action item" with which Wikibon research notes end:

Customers building their outward facing Web and mobile applications on public clouds while trying to build Hadoop applications on-premises should evaluate vendors offering it as-a-service. Hadoop already comes with significant administrative complexity by virtue of its multi-product design. On top of that, operating elastic applications couldn't be more different from the client-server systems IT has operated for decades.

"Hadoop's unprecedented pace of innovation comes precisely because it is an ecosystem, not a single product," Gilbert said. "Total cost of ownership and manageability have to change in order for 'Big Data' production applications to go mainstream. And if the Hadoop ecosystem doesn't fix the problem, there are alternatives competing for attention."

About the Author

David Ramel is an editor and writer at Converge 360.