Hortonworks Partners with Concurrent for Hadoop Development

- By David Ramel

- April 23, 2014

Hortonworks Inc. and Concurrent Inc. announced this week they are partnering to make Hadoop development easier and quicker by combining the former's data platform with the latter's Cascading application development framework.

As part of the expanded partnership, Hortonworks, a leading force in Hadoop development, will certify, support and include the open source Cascading SDK with the Hortonworks Data Platform (HDP). Also, future Cascading releases will support Apache Tez, an alternative to the original MapReduce programming model used with Hadoop. MapReduce has often been criticized for its slow, non-interactive batch processing, while Tez supports more interactive queries, faster response times and extreme throughput at huge scales.

"Users of Cascading will now be able to rapidly build data-centric applications that take advantage of the Tez providing users with highly interactive operational applications on top of Hadoop," said John Kreisa in a Hortonworks blog post announcing the partnership.

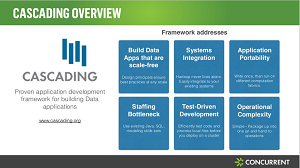

The Cascading application development framework

(source: Concurrent Inc.)

Kreisa claimed that Cascading is "the most widely used application development framework for data applications on Hadoop." He said Hortonworks will guarantee "the ongoing compatibility of Cascading-based applications across future releases of HDP with continuous compatibility testing and direct HDP customer support for Cascading." The companies will team up to provide different levels of support to Cascading users.

Hortonworks and Concurrent yesterday presented a webinar in which they described how to accelerate Big Data development with Cascading and HDP.

In the webinar, Concurrent executive Supreet Oberoi explained the genesis of the Cascading framework produced by his company, which was founded in 2008 by Chris Wensel, a pioneer in the Hadoop phenomenon who started the first Silicon Valley meetup for the budding technology.

Wensel thought Hadoop was powerful, Oberoi said, but he saw some challenges with the technology.

"The first one was that people who are used to developing data applications, they think in terms of business objects, and making them think in terms of maps and reduce is unintuitive," Oberoi said. "The second point is that even though these APIs were written in Java, the level of complexity will preclude many of the existing Java community developers from using those APIs."

The third, Oberoi continued, was the realization that different use cases require different execution fabrics, and that being coupled to one prevents developers from adopting better or more appropriate technologies that might come along in the future. With these challenges in mind, Wensel developed the Cascading API.

"As a Java-based framework, Cascading fits naturally into JVM-based languages, including Scala, Clojure, JRuby, Jython and Groovy," noted this site's editor at large John K. Waters in a blog post last winter. "And the Cascading community has created scripting and query languages for many of these languages."

Jules S. Damji explained in a Hortonworks blog post Monday that Wensel developed the API "with the sole purpose of enabling developers to write enterprise big data applications without the know-how of the underlying Hadoop complexity and without coding directly to the MapReduce API. Instead, he implemented high-level logical constructs, such as Taps, Pipes, Sources, Sinks, and Flows, as Java classes to design, develop, and deploy large-scale big data-driven pipelines."
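To make the idea of sources, pipes, sinks and flows more concrete, here is a minimal sketch in plain Java. It does not use the Cascading API: the `Source`, `Sink`, `Pipe` and `Flow` types and the `wordCount` helper are hypothetical stand-ins invented for illustration, and everything runs in memory rather than on Hadoop. It only shows the general shape of describing a data application as a pipeline instead of as explicit map and reduce steps.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Illustrative only: these types are hypothetical and are NOT Cascading classes.
class PipelineSketch {

    /** Hypothetical source: supplies the input records. */
    interface Source<T> { List<T> read(); }

    /** Hypothetical sink: receives the output records. */
    interface Sink<T> { void write(List<T> records); }

    /** Hypothetical pipe: one transformation step that can be chained. */
    interface Pipe<I, O> {
        List<O> apply(List<I> in);

        default <R> Pipe<I, R> then(Pipe<O, R> next) {
            return in -> next.apply(apply(in));
        }
    }

    /** Hypothetical flow: binds a source, a pipe assembly and a sink. */
    static final class Flow<I, O> {
        private final Source<I> source;
        private final Pipe<I, O> pipe;
        private final Sink<O> sink;

        Flow(Source<I> source, Pipe<I, O> pipe, Sink<O> sink) {
            this.source = source;
            this.pipe = pipe;
            this.sink = sink;
        }

        /** Reads from the source, runs the pipe, writes to the sink. */
        void complete() { sink.write(pipe.apply(source.read())); }
    }

    /** Counts lower-cased words in the given lines using the types above. */
    static Map<String, Integer> wordCount(List<String> lines) {
        // Step 1: split each line into lower-cased words.
        Pipe<String, String> tokenize = in -> in.stream()
                .flatMap(line -> Arrays.stream(line.toLowerCase().split("\\s+")))
                .filter(word -> !word.isEmpty())
                .collect(Collectors.toList());

        // Step 2: group identical words and count them.
        Pipe<String, Map.Entry<String, Integer>> count = words -> {
            Map<String, Integer> counts = new TreeMap<>();
            for (String word : words) {
                counts.merge(word, 1, Integer::sum);
            }
            return new ArrayList<>(counts.entrySet());
        };

        Map<String, Integer> result = new TreeMap<>();
        Source<String> source = () -> lines;
        Sink<Map.Entry<String, Integer>> sink =
                records -> records.forEach(e -> result.put(e.getKey(), e.getValue()));

        new Flow<>(source, tokenize.then(count), sink).complete();
        return result;
    }

    public static void main(String[] args) {
        System.out.println(wordCount(Arrays.asList(
                "big data on Hadoop", "data pipelines on Hadoop")));
    }
}
```

In this sketch the pipeline definition never mentions how it is executed. That separation is the kind of decoupling from a single execution fabric that Oberoi described, although how Cascading itself achieves it is not shown here.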

Damji further explained how to get started with Hadoop and Cascading with some simple examples and pointed developers to a tutorial for more detailed information.

About the Author

David Ramel is an editor and writer at Converge 360.